How can you design autonomous systems to be transparent and always open to human intervention? This is the question that eight post-docs will spend two years working on.

‘Computer says no.’ About ten years ago, the makers of the TV series Little Britain signalled the advent of artificial intelligence in the service industry. In those days, artificial intelligence was also dubbed ‘artificial incompetence’, because of the incomprehensible decisions made by software systems or because automated vehicles got stuck in complex traffic. It was funny. But that was then.

Two years ago, the VSNU (Association of Universities in the Netherlands) launched the Digital Society research programme in answer to the omnipresent digitisation that was unsettling society. Then-Rector Magnificus Karel Luyben contacted Prof. Inald Lagendijk (Computing-based society, EEMCS) and Prof. Jeroen van den Hoven (Ethics of information technology, TPM) to see whether and how TU Delft could play a role in the research programme.

How to keep control?

While walking around the campus, Lagendijk and Van den Hoven saw just how much artificial intelligence, and how many autonomous systems, were already in use. Applications included both pure software, such as decision support programmes, and embedded software in robots, nearly self-driving cars and drones. Although the researchers they spoke to were enthusiastic, in-depth questioning revealed that most of them weren’t entirely sure how such an artificial brain worked, or what the effects of errors in input or processing would be.

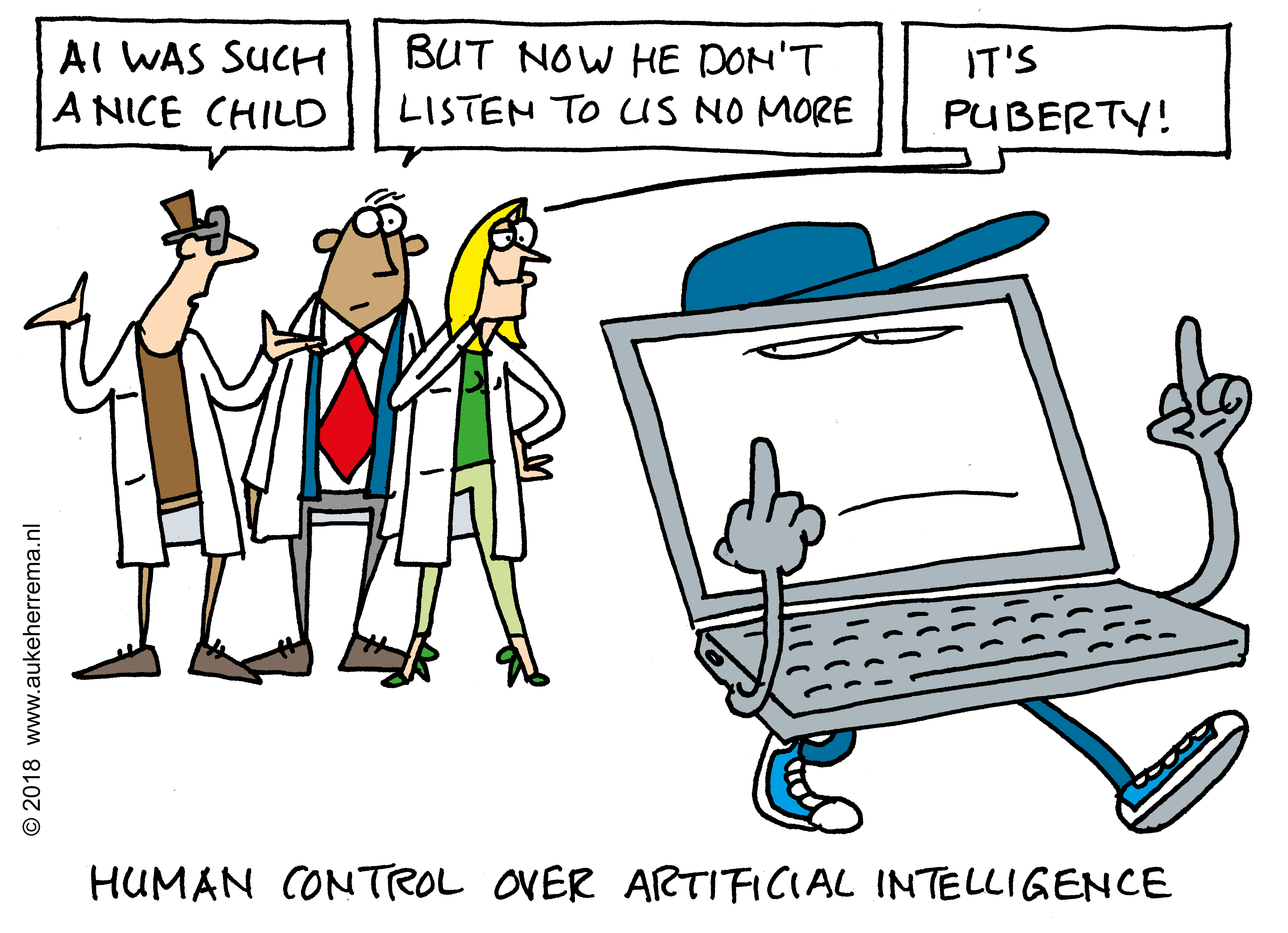

Lagendijk explains: “Everyone faces the same questions: how can I keep control? How do I understand what’s going on? How can I justify the decisions the system makes? All kinds of legal, ethical and social questions arise, which lots of people are working on. Institutes impose codes of conduct along the lines of ‘thou shalt…’, but little attention is given to how to achieve this technically through research, design and engineering.”

This is now the focus of TU Delft’s contribution to the AiTech research programme: technical measures designed to safeguard human control of autonomous systems. The team was reinforced by the addition of Prof. Elisa Giaccardi (IDE) and Prof. Martijn Wisse (3mE), with Dr Luuk Mur as secretary.

AiTech

For the next two years, eight post-docs from the Faculties of EEMCS, TPM, IDE and 3mE will conduct research into the meaningful human control of artificial intelligence systems. They will divide their time between two faculties and the AiTech Centre based on the Construction Campus. Half of the € 2 million funding has been allocated by the Executive Board and the other half by the faculties concerned.

The researchers, who are currently being recruited, are asked to supplement their applications with a research proposal that meets three criteria. First, the project must contribute to raising awareness of human control of applied artificial intelligence. Second, the researcher must draw up criteria for what human control entails in their particular case, and be able to quantify it. Finally, the research must lead to a technical application.

The open strategy chosen by AiTech is known as a ‘mission-driven research initiative’. Based on an innovation model devised by the economist Mariana Mazzucato, the programme focuses on a distant goal but allows researchers to plan their own path, including, and particularly, when they are competing with each other. Lagendijk and Mur also stress that others can take part in AiTech: the initiative is open to anyone connected with the field. This openness will probably take the form of lectures and other gatherings.

What results does Lagendijk expect in another two years? “I’ll be delighted if we have four or five examples in which we’ve defined meaningful human control more clearly and built it into technical systems.” TU Delft hopes that AiTech will give direction to government and corporate bodies that intend to implement artificial intelligence but may be unaware of the long-term implications. According to Lagendijk, this is the task of the university: “Not just to issue warnings, but also to develop solutions.”

- Who is responsible?

A bump in the road causes a self-driving car to make an emergency stop. It brakes so suddenly that the car behind hits it. Who is responsible? The company that built the road, the car manufacturer, the software developer or the driver of the second car? Questions like this will become increasingly important. Van den Hoven is promoting a system of tracking (knowing what the user wants) and tracing (working out what happened afterwards) to keep control of autonomous systems. Prof. Bart van Arem wants to apply these concepts to the transition from driving yourself to self-driving.

- What makes him so special?

In the not-too-distant future, your electric car won’t necessarily start charging as soon as you connect it to a charging station: the grid capacity is insufficient and the cables aren’t thick enough. So who gets priority? The person with a plus-subscription, the neighbour who’s a doctor, or the woman over the road who starts work early? How does the system decide, and how can you convince users that it is acceptable and fair? This is the field of Prof. Elisa Giaccardi, who teaches interactive media design at IDE.

- A discreet helping hand

A navigation aid for visually impaired people that is flexible enough to help someone catch a bus one day and cross the road the next: this is an example of a Socially Adaptive Electronic Partner (SAEP), which Dr Birna van Riemsdijk (EEMCS) is currently working on. The underlying software must be flexible enough to adapt smoothly to deviations from routine and to unforeseen circumstances. After all, you usually go home to sleep, but not always.

This article appeared in the October issue of Delft Outlook, the alumni magazine of TU Delft.

Do you have a question or comment about this article?

j.w.wassink@tudelft.nl