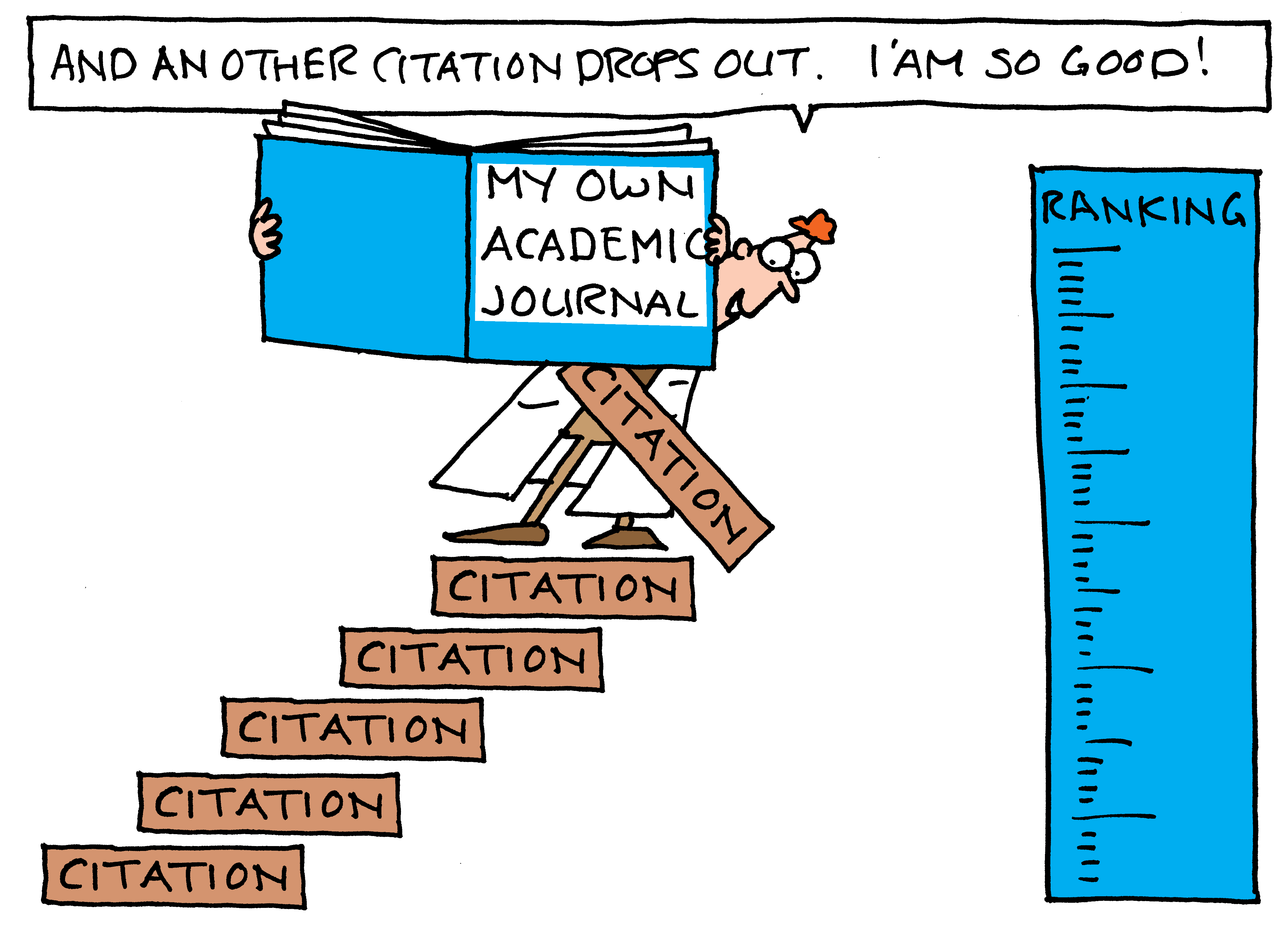

The Impact Factor is so important for journals that some of them use tricks to inflate it. An indicator has been developed to spot such fraud.

The Impact Factor of an academic journal is a measure of the number of citations to recent articles, and thus of its relative importance. Publishers became aware of it some twenty years ago. Since then, journals have increasingly cited themselves to jack up their rankings.

Professor Caspar Chorus (Choice behaviour modelling at the Faculty of Technology, Policy and Management) and his colleague Dr. Ludo Waltman from the Centre for Science and Technology Studies at Leiden University have published an article in PLOS ONE in an attempt to reduce misuse of the impact factor.

The principle behind the manipulation of impact factors is simple enough. One only needs to increase the number of citations to recent articles (less than two years old) in one’s journal. To that end, editors may ask, or force, authors to add citations to their own periodical. Better still, authors will spontaneously add more or less idle references to a journal to improve their chances of publication in it. Citation cartels also exist, in which journals cite not only their own papers but also those of their partners.

The indicator that Chorus and Waltman developed compares the share of journal self-citations to articles from the last two years with that share for articles from the preceding years. They found that this ratio, called Impact Factor Biased Self-citation Practices or IFBSCP, correlates strongly with coercive journal self-citation malpractices, which were reported in earlier surveys.
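As a rough illustration of how such a ratio works, the sketch below computes it from four citation counts. It is not taken from the PLOS ONE paper: the function names, the way a "share" is defined (self-citations divided by all citations to articles in the same age window) and the input numbers are assumptions made for this example.

```python
def self_citation_share(self_citations: int, total_citations: int) -> float:
    """Fraction of citations that come from the journal itself.

    Assumption: the share is self-citations over all citations received
    by the journal's articles in one age window.
    """
    if total_citations <= 0:
        raise ValueError("total_citations must be positive")
    if not 0 <= self_citations <= total_citations:
        raise ValueError("self_citations must lie between 0 and total_citations")
    return self_citations / total_citations


def ifbscp(recent_self: int, recent_total: int,
           older_self: int, older_total: int) -> float:
    """Ratio of the self-citation share for recent articles (the two-year
    impact factor window) to the share for older articles.

    Hypothetical helper: how many 'preceding years' count as older is
    left to the caller, who supplies the counts.
    """
    recent_share = self_citation_share(recent_self, recent_total)
    older_share = self_citation_share(older_self, older_total)
    if older_share == 0:
        raise ValueError("ratio is undefined without self-citations to older articles")
    return recent_share / older_share


# Made-up counts: half of the citations to recent articles are self-citations,
# against a quarter of the citations to older articles.
score = ifbscp(recent_self=50, recent_total=100, older_self=50, older_total=200)
print(score)
```

A score above 1 means the journal cites its own recent articles disproportionately often compared with its older ones. A score of 1 means self-citation is spread evenly across both age windows.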

They computed their fraud indicator for all scientific journals with an impact factor, for all years since 1987. Up until the early 2000s, the indicator had a stable mean value of about 1.4. Since then, the value has rapidly increased.

The occurrence of many self-citations to recent articles is not necessarily fraudulent, Chorus and Waltman explained. For example, an author may look for related articles in a journal of interest and add citations as a service to the readers. So although a high IFBSCP score does not necessarily mean wrongdoing by the associated journal, the indicator does have strong potential as a tool for a first diagnosis of malpractice.

Are the authors going to keep a fraud score of scientific journals? Chorus replied that the topic is too far outside his normal field of research to keep track.

Waltman, who studies the practices of science, said, “We are not going to make yearly reports. Our article is a contribution to the discussion about impact factors that has been going on between scientists and publishers for twenty years. Over the last two years, however, we have seen constructive steps in how to restrain citation malpractices. In that context, our indicator may have a role to play.”

–> Caspar G. Chorus, Ludo Waltman, A large-scale analysis of impact factor biased journal self-citations, PLOS ONE, August 25th, 2016, DOI:10.1371/journal.pone.0161021
